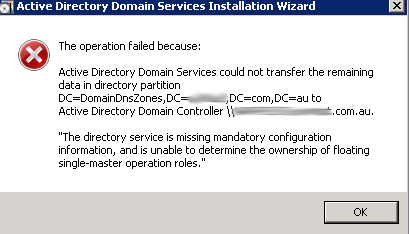

I was recently demoting a Domain Controller after upgrading to Server 2016 when I came across the following error message:

This most likely means that the fSMORoleOwner attribute still points to the server you're trying to decommission, and the demotion can't proceed while that is the case. The fix is to update the attribute so that it points to another DC that's active.
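To make that condition concrete, here is a minimal Python sketch (an illustration only, not part of the fix) that pulls the server name out of an fSMORoleOwner value and compares it with the DC being demoted. The value is the DN of a DC's NTDS Settings object, so the server is the second component. The server names `OLD-DC01` and `NEW-DC01`, the site name and the function names are hypothetical, and the DN is split naively on commas, which assumes no escaped commas in the names.

```python
def owner_server(fsmo_role_owner: str) -> str:
    """Return the server name embedded in an fSMORoleOwner value."""
    rdns = fsmo_role_owner.split(",")  # naive split: assumes no escaped commas
    if len(rdns) < 2 or not rdns[0].upper().startswith("CN=NTDS SETTINGS"):
        raise ValueError("not an NTDS Settings DN: " + fsmo_role_owner)
    # The second RDN is the server object, e.g. "CN=OLD-DC01".
    name = rdns[1].split("=", 1)[1]
    # A deleted object carries a "\0ADEL:<guid>" suffix; drop it if present.
    return name.split("\\0ADEL:", 1)[0]


def points_at(fsmo_role_owner: str, server: str) -> bool:
    """True if the role owner is the given server (case-insensitive)."""
    return owner_server(fsmo_role_owner).lower() == server.lower()


# Hypothetical value as it might appear in ADSI Edit.
owner = ("CN=NTDS Settings,CN=OLD-DC01,CN=Servers,"
         "CN=Default-First-Site-Name,CN=Sites,CN=Configuration,"
         "DC=contoso,DC=com")

print(owner_server(owner))           # OLD-DC01
print(points_at(owner, "OLD-DC01"))  # True
print(points_at(owner, "NEW-DC01"))  # False
```

If the comparison is true for the DC you are demoting, the attribute needs to be repointed before the demotion will succeed.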

First, to confirm this, you will need to go into ADSI Edit. Connect to the following:

Once you've done this, open up CN=Infrastructure:

Look for fSMORoleOwner and check the server name that is referenced here:

In this case it's showing my new DC, but originally it was showing the DC that I wanted to decommission. To resolve this, I used the following script:

    const ADS_NAME_INITTYPE_GC = 3
    const ADS_NAME_TYPE_1779 = 1
    const ADS_NAME_TYPE_CANONICAL = 2

    set inArgs = WScript.Arguments

    if (inArgs.Count = 1) then
        ' Assume the command line argument is the NDNC (in DN form) to use.
        NdncDN = inArgs(0)
    Else
        Wscript.StdOut.Write "usage: cscript fixfsmo.vbs NdncDN"
    End if

    if (NdncDN <> "") then

        ' Convert the DN form of the NDNC into DNS dotted form.
        Set objTranslator = CreateObject("NameTranslate")
        objTranslator.Init ADS_NAME_INITTYPE_GC, ""
        objTranslator.Set ADS_NAME_TYPE_1779, NdncDN
        strDomainDNS = objTranslator.Get(ADS_NAME_TYPE_CANONICAL)
        strDomainDNS = Left(strDomainDNS, len(strDomainDNS)-1)

        Wscript.Echo "DNS name: " & strDomainDNS

        ' Find a domain controller that hosts this NDNC and that is online.
        set objRootDSE = GetObject("LDAP://" & strDomainDNS & "/RootDSE")
        strDnsHostName = objRootDSE.Get("dnsHostName")
        strDsServiceName = objRootDSE.Get("dsServiceName")
        Wscript.Echo "Using DC " & strDnsHostName

        ' Get the current infrastructure fsmo.
        strInfraDN = "CN=Infrastructure," & NdncDN
        set objInfra = GetObject("LDAP://" & strInfraDN)
        Wscript.Echo "infra fsmo is " & objInfra.fsmoroleowner

        ' If the current fsmo holder is deleted, set the fsmo holder to this domain controller.
        if (InStr(objInfra.fsmoroleowner, "\0ADEL:") > 0) then

            ' Set the fsmo holder to this domain controller.
            objInfra.Put "fSMORoleOwner", strDsServiceName
            objInfra.SetInfo

            ' Read the fsmo holder back.
            set objInfra = GetObject("LDAP://" & strInfraDN)
            Wscript.Echo "infra fsmo changed to:" & objInfra.fsmoroleowner

        End if

    End if

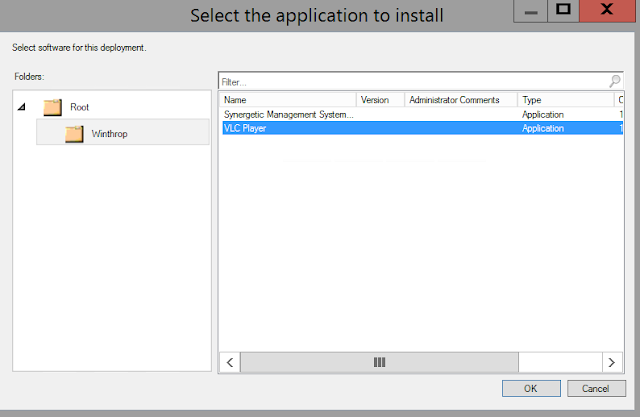
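Two steps in the VBScript are easy to miss: it turns the partition DN into a DNS name so it can locate an online DC, and it only rewrites fSMORoleOwner when the current value contains the `\0ADEL:` marker of a deleted object. The Python sketch below illustrates just those two string operations. It is not a replacement for the script, it does not talk to Active Directory, and the function names are hypothetical. The conversion only handles DNs made up entirely of `DC=` components, which is an assumption that holds for the partitions used in this post.

```python
DELETED_MARKER = "\\0ADEL:"  # literal backslash, then "0ADEL:"


def ndnc_dn_to_dns(dn: str) -> str:
    """Convert e.g. 'DC=DomainDnsZones,DC=contoso,DC=com' to dotted DNS form."""
    labels = []
    for rdn in dn.split(","):
        key, _, value = rdn.strip().partition("=")
        if key.upper() != "DC" or not value:
            raise ValueError("expected only DC= components, got: " + rdn)
        labels.append(value)
    return ".".join(labels)


def owner_is_deleted(fsmo_role_owner: str) -> bool:
    """Mirror the script's InStr test for a deleted role owner."""
    return DELETED_MARKER in fsmo_role_owner


print(ndnc_dn_to_dns("DC=DomainDnsZones,DC=contoso,DC=com"))
# DomainDnsZones.contoso.com

print(owner_is_deleted("CN=NTDS Settings,CN=DC01,CN=Servers,DC=contoso,DC=com"))
# False
```

The second check matters in practice: when the marker is absent, the script prints the current owner and leaves the attribute unchanged.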

Save the VBScript above as a new file called "FixFSMO.vbs". Copy it to the desktop of a DC that's active and then run the following command:

cscript fixfsmo.vbs DC=DomainDnsZones,DC=contoso,DC=com

You will also need to run the same command for the ForestDnsZones partition:

cscript fixfsmo.vbs DC=ForestDNSZones,DC=contoso,DC=com
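The two commands differ only in the partition name, so for your own domain they can be derived from the domain's DNS name. Here is a small Python sketch of that pattern. The function name is hypothetical, and it assumes the domain you pass in is also the forest root (as with contoso.com here), since ForestDnsZones sits under the forest root domain.

```python
def fixfsmo_commands(domain_dns: str, script: str = "fixfsmo.vbs") -> list:
    """Build the two cscript command lines for a domain such as 'contoso.com'."""
    # 'contoso.com' -> 'DC=contoso,DC=com'
    domain_dn = ",".join("DC=" + label for label in domain_dns.split("."))
    zones = ("DomainDnsZones", "ForestDnsZones")
    return ["cscript {} DC={},{}".format(script, zone, domain_dn) for zone in zones]


for command in fixfsmo_commands("contoso.com"):
    print(command)
# cscript fixfsmo.vbs DC=DomainDnsZones,DC=contoso,DC=com
# cscript fixfsmo.vbs DC=ForestDnsZones,DC=contoso,DC=com
```

In a multi-domain forest the ForestDnsZones DN would need the forest root domain's components instead, so adjust the second command accordingly.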

Once you've done this, check the object in ADSI Edit again and you will see that fSMORoleOwner now references an active DC. Let this sit for 15 minutes or so to ensure that the change replicates to all DCs, and then you should be able to re-run DCPROMO to demote the Domain Controller.